S3 Read API#

Sanhe

Apr 20, 2023

15 min read

What is S3 Read API#

AWS S3 provides a wide range of APIs. Some of them only retrieve information from the server without changing the state of the S3 bucket (e.g. no files are moved, changed, or deleted). Unlike Write API functions, using Read API functions improperly will NOT cause any negative impact. Therefore, it is recommended to start by exploring the Read API functions before diving into the Write API.

Configure the AWS Context object#

Before you can run any AWS API, you must first authenticate. The Context is a singleton object that manages authenticated sessions.

To get started, it’s necessary to configure AWS CLI credentials on your local machine. If you’re unsure how to do this, you can follow this official guide provided by AWS.

Once it’s done, you can run the following command to test your authentication.

[4]:

!aws sts get-caller-identity

{

"UserId": "ABCDEFABCDEFABCDEFABC",

"Account": "111122223333",

"Arn": "arn:aws:iam::111122223333:user/johndoe"

}

The Context object stores a pre-authenticated boto session, which is created using your default credentials (if available). However, you can also configure a custom boto session yourself and attach it to the context.

[2]:

import boto3

from s3pathlib import context

context.attach_boto_session(

    boto3.session.Session(

        region_name="us-east-1",

        profile_name="my_aws_profile",

    )

)

When s3pathlib makes AWS API calls, it uses the boto session stored in the Context object by default. However, you can always explicitly pass a custom boto session to the API call if needed.

[10]:

from s3pathlib import S3Path

from boto_session_manager import BotoSesManager

bsm = BotoSesManager(

    region_name="us-east-1",

    profile_name="my_aws_profile",

)

s3path = S3Path("s3://my-bucket/test.txt")

_ = s3path.write_text("hello world", bsm=bsm) # explicitly pass the boto session

If you are running the code on a cloud machine such as AWS EC2 or AWS Lambda, follow this official guide to grant your compute resource proper AWS S3 access.

Get S3 Object Metadata#

An object consists of data and its descriptive metadata. s3pathlib provides a user-friendly interface for accessing object metadata without needing to explicitly invoke the API. Additionally, it automatically caches the underlying head_object API response for improved performance.

[5]:

s3path = S3Path("s3://s3pathlib/test.txt")

s3path.write_text("hello world" * 1000) # create a test object

[5]:

S3Path('s3://s3pathlib/test.txt')

[14]:

s3path.etag

[14]:

'4d5d1cba9eb18884a5410f4b83bc6951'

[15]:

s3path.last_modified_at

[15]:

datetime.datetime(2023, 4, 20, 7, 1, 13, tzinfo=tzutc())

[16]:

s3path.size

[16]:

11000

[17]:

s3path.size_for_human

[17]:

'10.74 KB'

[18]:

s3path.version_id

[18]:

'null'

[23]:

print(s3path.expire_at)

None

Note

Metadata is cached only once, either when it’s first accessed or when the get_object API is called. The cache is not automatically refreshed and cannot detect server-side changes. To obtain the latest server-side metadata value, you can use the clear_cache() method to clear the cache. The latest data will be retrieved on the next attempt to access the metadata.

Please see the following example.

[26]:

# Create a test file

s3path = S3Path("s3://s3pathlib/file-with-metadata.txt")

s3path.write_text("hello world", metadata={"creator": "s3pathlib"})

print(s3path.size)

print(s3path.metadata)

11

{'creator': 's3pathlib'}

[27]:

# The server side data is changed

s3path.write_text("hello charlice", metadata={"creator": "charlice"})

# You still see the old data

print(s3path.size)

print(s3path.metadata)

11

{'creator': 's3pathlib'}

[28]:

# After you clear the cache, you get the latest data

s3path.clear_cache()

print(s3path.size)

print(s3path.metadata)

14

{'creator': 'charlice'}
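The caching behaviour described in the note can be pictured with a small stand-alone model. This is only a sketch and not s3pathlib's implementation: `FakeServer` and `CachedObject` are hypothetical names, and a dictionary stands in for S3.

```python
class FakeServer:
    """Hypothetical stand-in for S3: maps a key to its current content."""

    def __init__(self):
        self.objects = {}
        self.head_calls = 0  # counts simulated head_object requests

    def head(self, key):
        self.head_calls += 1
        return {"size": len(self.objects[key])}


class CachedObject:
    """Keeps the first metadata response until clear_cache() is called."""

    def __init__(self, server, key):
        self.server = server
        self.key = key
        self._meta = None

    @property
    def size(self):
        if self._meta is None:  # only ask the server on a cache miss
            self._meta = self.server.head(self.key)
        return self._meta["size"]

    def clear_cache(self):
        self._meta = None


server = FakeServer()
server.objects["file.txt"] = "hello world"
obj = CachedObject(server, "file.txt")

first = obj.size                                # fetched from the server
server.objects["file.txt"] = "hello charlice"   # server-side change
stale = obj.size                                # served from the cache
obj.clear_cache()
fresh = obj.size                                # fetched again
print(first, stale, fresh, server.head_calls)
```

The model makes the trade-off visible: repeated attribute access costs no extra requests, but a stale value is returned until the cache is cleared.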

Check if Object or Directory Exists#

Check the Existence Of An Object#

You can check if an S3 bucket exists using the exists() method.

[32]:

S3Path("s3pathlib").exists()

[32]:

True

[33]:

S3Path("a-bucket-never-exists").exists()

[33]:

False

You can also check whether an S3 object exists.

[34]:

S3Path("s3://s3pathlib/a-file-never-exists.txt").exists()

[34]:

False

[35]:

s3path = S3Path("s3://s3pathlib/test.txt")

s3path.write_text("hello world")

s3path.exists()

[35]:

True

S3 Versioning is a feature that preserves, retrieves, and restores every version of every object stored in your buckets. s3pathlib also supports checking the existence of either “an object (the latest version)” or “a specific version”.

[40]:

# the s3pathlib-versioning-enabled bucket has versioning enabled

s3path = S3Path("s3://s3pathlib-versioning-enabled/test.txt")

# prepare some test data

v1 = s3path.write_text("v1").version_id # add v1

v2 = s3path.write_text("v2").version_id # add v2

s3path.delete() # add a delete marker on top of v2

[40]:

S3Path('s3://s3pathlib-versioning-enabled/test.txt')

[41]:

# the object (latest) is considered not to exist, since the latest version is a delete marker

s3path.exists()

[41]:

False

[42]:

s3path.exists(version_id=v1) # but the older version is considered as exists

[42]:

True

[43]:

s3path.read_text(version_id=v1) # verify that it is really the older version

[43]:

'v1'
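The versioned existence check above can be reasoned about with a tiny in-memory model. This is a hedged sketch, not the library's code: `Version` and `exists` are hypothetical names, and a delete marker is modelled as a version whose content is `None`.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Version:
    version_id: str
    content: Optional[str]  # None marks a delete marker


def exists(versions: List[Version], version_id: Optional[str] = None) -> bool:
    """Check existence; `versions` is ordered from oldest to newest."""
    if version_id is None:
        # No version requested: only the latest entry decides.
        return bool(versions) and versions[-1].content is not None
    # A specific version exists if it is present and is not a delete marker.
    return any(
        v.version_id == version_id and v.content is not None for v in versions
    )


history = [
    Version("v1-id", "v1"),
    Version("v2-id", "v2"),
    Version("marker-id", None),  # what a delete adds on a versioned bucket
]
latest_exists = exists(history)
v1_exists = exists(history, version_id="v1-id")
print(latest_exists, v1_exists)
```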

Check The Existence of A Directory#

As an S3 directory is a logical concept and often doesn’t physically exist, its exists() method will return True only if there is at least one object within the directory or if the directory is a hard folder (an empty object with a trailing “/”).

[44]:

# at the beginning, the folder does not exist because there's no file in it

s3dir = S3Path("s3://s3pathlib/soft-folder/")

s3dir.exists()

[44]:

False

[45]:

# after creating a file in it, the folder is considered to exist even though it is not a hard folder

s3dir.joinpath("file.txt").write_text("hello world")

s3dir.exists()

[45]:

True

[46]:

# at the beginning, the hard folder does not exist because we haven't created it yet

s3dir = S3Path("s3://s3pathlib/hard-folder/")

s3dir.exists()

[46]:

False

[48]:

# after creating a hard folder, now it exists

s3dir.mkdir(exist_ok=True)

s3dir.exists()

[48]:

True

[50]:

# and you can see that the hard folder is just an empty object with trailing "/" in the S3 key

s3dir.read_text()

[50]:

''

[49]:

# and there is no object in it

s3dir.count_objects()

[49]:

0
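The rule for soft and hard folders can be expressed over a plain set of keys. The following is a minimal sketch under the assumption that a folder is just a key prefix; `dir_exists` and `count_under` are hypothetical helpers, not s3pathlib functions.

```python
def dir_exists(keys, prefix):
    """A 'directory' exists if any key starts with its prefix.

    The hard-folder key itself (equal to the prefix) also counts.
    """
    return any(key.startswith(prefix) for key in keys)


def count_under(keys, prefix, include_folder=False):
    """Count keys under prefix; keys ending in '/' are folder placeholders."""
    return sum(
        1
        for key in keys
        if key.startswith(prefix) and (include_folder or not key.endswith("/"))
    )


keys = {"soft-folder/file.txt", "hard-folder/"}

print(dir_exists(keys, "soft-folder/"))   # soft folder with one object
print(dir_exists(keys, "hard-folder/"))   # hard folder, empty placeholder
print(dir_exists(keys, "missing/"))       # nothing under this prefix
print(count_under(keys, "hard-folder/"))  # placeholder is not an object
```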

You cannot check the existence of a Void path or a Relative path, because they are purely logical concepts that do not point to a concrete S3 location.

Count Number of Objects and Total Size in a Directory#

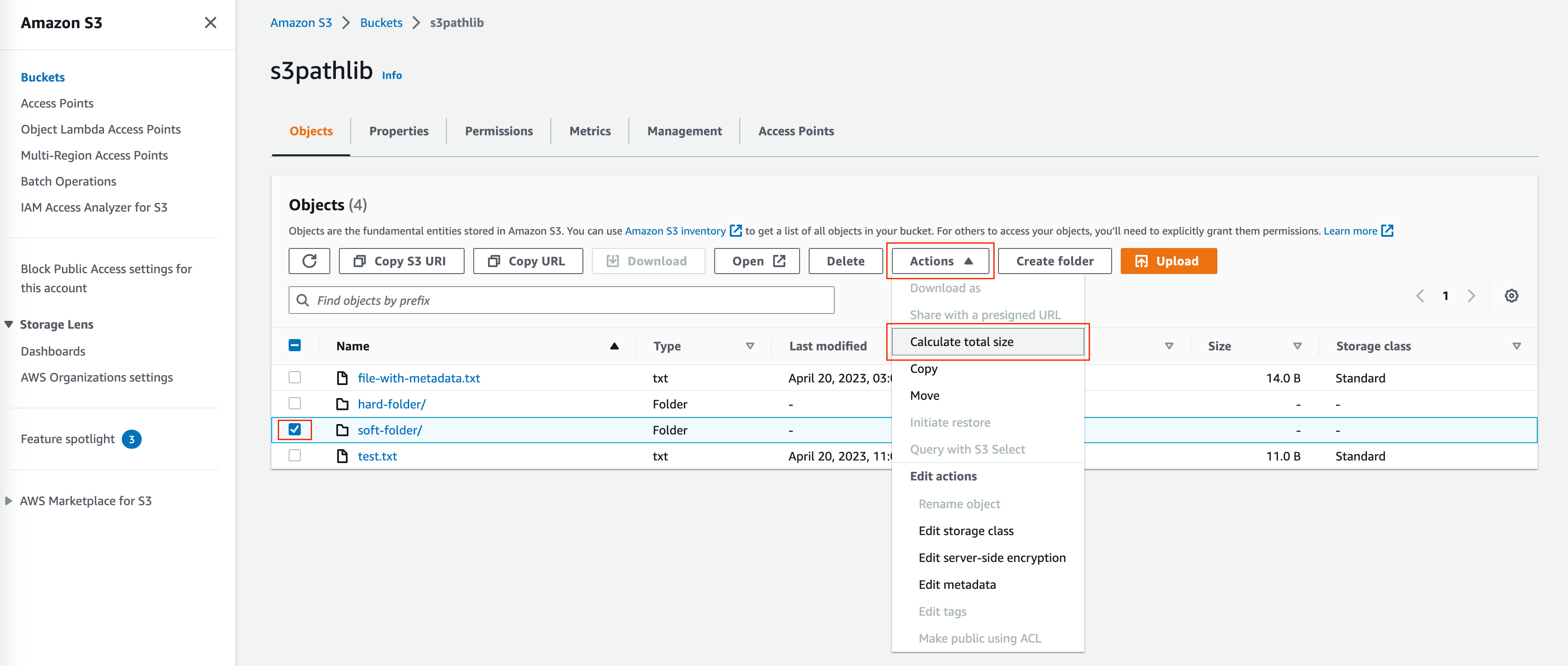

The AWS Console has a “Calculate Total Size” button that tells you how many objects an S3 folder contains and their total size. calculate_total_size() and count_objects() can do that too.

[62]:

s3dir = S3Path("s3://s3pathlib/calculate-total-zie/")

s3dir.mkdir(exist_ok=True) # this is a hard folder and actually exists

s3dir.joinpath("file1.txt").write_text("Hello Alice\n" * 1000)

s3dir.joinpath("file2.txt").write_text("Hello Bob\n" * 1000)

s3dir.joinpath("file3.txt").write_text("Hello Cathy\n" * 1000)

[62]:

S3Path('s3://s3pathlib/calculate-total-zie/file3.txt')

[63]:

s3dir.calculate_total_size()

[63]:

(3, 34000)

[64]:

s3dir.calculate_total_size(for_human=True)

[64]:

(3, '33.20 KB')

[66]:

# since we "include folder", it returns 4 (one hard folder and three objects)

s3dir.count_objects(include_folder=True)

[66]:

4
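The numbers in this section can be checked by hand. The sketch below recomputes the total size of the three test files and formats it, assuming 1024-based units (an assumption that is consistent with the outputs shown, but not taken from the library's source); `to_human_size` is a hypothetical helper.

```python
def to_human_size(n_bytes: int) -> str:
    """Format a byte count using 1024-based units (an assumption)."""
    size = float(n_bytes)
    for unit in ["B", "KB", "MB", "GB", "TB"]:
        if size < 1024 or unit == "TB":
            return f"{size:.2f} {unit}"
        size /= 1024


sizes = [
    len("Hello Alice\n" * 1000),  # 12 bytes per line
    len("Hello Bob\n" * 1000),    # 10 bytes per line
    len("Hello Cathy\n" * 1000),  # 12 bytes per line
]
total = sum(sizes)
human = to_human_size(total)
print(len(sizes), total, human)
```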

List and Filter Objects#

In a file system, it is very common to:

list all sub-folders and files in the current directory, not recursively.

recursively travel through all sub-folders and files.

filter folder and files by user-defined criteria.

s3pathlib provides a user-friendly interface to do so.

List Objects#

The iter_objects() method is the core API for listing and filtering S3 objects (not directories). It supports the following arguments:

batch_size: an integer, the number of S3 objects returned per API call. Internally, it makes pagination API calls to iterate through all S3 objects. A large batch size can reduce the total number of API calls and improve performance.

limit: an integer, limits the number of objects you want to return.

recursive: defaults to True, which goes through subfolders as well. Set it to False to iterate through the top-level folder only.

include_folder: defaults to False. If set to True, it also returns empty S3 objects that end with a trailing /, which are considered folders in the S3 console.
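How batch_size and limit interact can be sketched with an in-memory list standing in for the bucket. This is an illustration only: `iter_keys` is a hypothetical function, and each list slice plays the role of one paginated API call.

```python
from typing import Iterator, List, Optional


def iter_keys(
    all_keys: List[str],
    batch_size: int = 1000,
    limit: Optional[int] = None,
) -> Iterator[str]:
    """Yield keys page by page, stopping once `limit` keys were yielded."""
    yielded = 0
    for start in range(0, len(all_keys), batch_size):
        page = all_keys[start:start + batch_size]  # one simulated API call
        for key in page:
            if limit is not None and yielded >= limit:
                return
            yield key
            yielded += 1


keys = [f"file-{i}.txt" for i in range(10)]
first_five = list(iter_keys(keys, batch_size=3, limit=5))
everything = list(iter_keys(keys, batch_size=3))
print(first_five)
print(len(everything))
```

In this model a larger batch_size means fewer slices (fewer simulated calls) for the same result, while limit only caps how many items are yielded.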

[92]:

# first, let's prepare some test data

s3dir = S3Path("s3://s3pathlib/list-objects/")

s3dir.joinpath("README.txt").write_text("read me please") # 1

s3dir.joinpath("logo.png").write_bytes(b"01010101" * 1000) # 2

s3dir.joinpath("folder/data1.json").write_text('{"name": "alice"}') # 3

s3dir.joinpath("folder/data2.json").write_text('{"name": "bob"}') # 4

s3dir.joinpath("folder/subfolder/config.ini").write_text('this is a config file') # 5

s3dir.joinpath("folder/logs/day1.txt").write_text("Hello Alice\n" * 1000) # 6

s3dir.joinpath("folder/logs/day2.txt").write_text("Hello Bob\n" * 1000) # 7

s3dir.count_objects()

[92]:

7

[93]:

for s3path in s3dir.iter_objects():

    print(s3path)

S3Path('s3://s3pathlib/list-objects/README.txt')

S3Path('s3://s3pathlib/list-objects/folder/data1.json')

S3Path('s3://s3pathlib/list-objects/folder/data2.json')

S3Path('s3://s3pathlib/list-objects/folder/logs/day1.txt')

S3Path('s3://s3pathlib/list-objects/folder/logs/day2.txt')

S3Path('s3://s3pathlib/list-objects/folder/subfolder/config.ini')

S3Path('s3://s3pathlib/list-objects/logo.png')

[94]:

for s3path in s3dir.iter_objects(recursive=False):

    print(s3path)

S3Path('s3://s3pathlib/list-objects/README.txt')

S3Path('s3://s3pathlib/list-objects/logo.png')

iter_objects() actually returns an S3PathIterProxy object. This is a user-friendly iterable Python object that allows you to iterate over a subset of the returned data instead of loading everything into memory in one shot. It also provides additional features such as pagination, skipping, getting one or none, and custom filtering.

[95]:

# Create proxy

proxy = s3dir.iter_objects()

[96]:

# Get one item

proxy.one()

[96]:

S3Path('s3://s3pathlib/list-objects/README.txt')

[97]:

# Get many items

proxy.many(2)

[97]:

[S3Path('s3://s3pathlib/list-objects/folder/data1.json'),

S3Path('s3://s3pathlib/list-objects/folder/data2.json')]

[98]:

# Skip some items

proxy.skip(1) # s3://s3pathlib/list-objects/folder/logs/day1.txt is skipped

proxy.one()

[98]:

S3Path('s3://s3pathlib/list-objects/folder/logs/day2.txt')

[99]:

# Get the rest of items

proxy.all()

[99]:

[S3Path('s3://s3pathlib/list-objects/folder/subfolder/config.ini'),

S3Path('s3://s3pathlib/list-objects/logo.png')]

[100]:

# Get one item or none

print(proxy.one_or_none())

None

[101]:

# Load everything into a list in one shot

s3dir.iter_objects().all()

[101]:

[S3Path('s3://s3pathlib/list-objects/README.txt'),

S3Path('s3://s3pathlib/list-objects/folder/data1.json'),

S3Path('s3://s3pathlib/list-objects/folder/data2.json'),

S3Path('s3://s3pathlib/list-objects/folder/logs/day1.txt'),

S3Path('s3://s3pathlib/list-objects/folder/logs/day2.txt'),

S3Path('s3://s3pathlib/list-objects/folder/subfolder/config.ini'),

S3Path('s3://s3pathlib/list-objects/logo.png')]
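The way the proxy consumes items step by step can be imitated with a thin wrapper around a Python iterator. The class below is a hypothetical sketch (`IterProxy` is not the library's class, and its method bodies are assumptions); it only mirrors the sequence of calls shown above on a list of seven letters.

```python
class IterProxy:
    """Hypothetical wrapper exposing one/many/skip/all over any iterable."""

    def __init__(self, iterable):
        self._it = iter(iterable)

    def __iter__(self):
        return self._it

    def one(self):
        return next(self._it)  # raises StopIteration when exhausted

    def one_or_none(self):
        return next(self._it, None)

    def many(self, k):
        items = []
        for _ in range(k):
            try:
                items.append(next(self._it))
            except StopIteration:
                break
        return items

    def skip(self, k):
        self.many(k)  # consume and discard k items

    def all(self):
        return list(self._it)


proxy = IterProxy(["a", "b", "c", "d", "e", "f", "g"])
first = proxy.one()
pair = proxy.many(2)
proxy.skip(1)
after_skip = proxy.one()
rest = proxy.all()
nothing = proxy.one_or_none()
print(first, pair, after_skip, rest, nothing)
```

Because every method advances the same underlying iterator, each call continues where the previous one stopped, which is why the skipped item is the fourth one rather than the second.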

Filter Objects#

Filtering by Attributes#

s3pathlib provides a SQL-like interface that allows you to filter objects by their attributes. Below is the full list of built-in attributes that can be used for filtering:

S3Path.bucket: str

S3Path.key: str

S3Path.uri: str

S3Path.arn: str

S3Path.parts: list[str]

S3Path.basename: str

S3Path.fname: str

S3Path.ext: str

S3Path.dirname: str

S3Path.dirpath: str

S3Path.abspath: str

S3Path.etag: str

S3Path.size: int

S3Path.last_modified_at: datetime

S3Path.version_id: str

S3Path.expire_at: datetime

[102]:

# filter by file extension

for s3path in s3dir.iter_objects().filter(S3Path.ext == ".json"):

    print(s3path)

S3Path('s3://s3pathlib/list-objects/folder/data1.json')

S3Path('s3://s3pathlib/list-objects/folder/data2.json')

[118]:

# filter by size in bytes

for s3path in s3dir.iter_objects().filter(S3Path.size >= 1000):

    print(s3path, s3path.size)

S3Path('s3://s3pathlib/list-objects/folder/logs/day1.txt') 12000

S3Path('s3://s3pathlib/list-objects/folder/logs/day2.txt') 10000

S3Path('s3://s3pathlib/list-objects/logo.png') 8000

Filtering by Comparator#

A comparator is a helper method on an attribute, such as between(), startswith(), or contains(), that constructs the filtering criterion for you.

[117]:

for s3path in s3dir.iter_objects().filter(S3Path.size.between(1_000, 1_000_000)):

    print(s3path, s3path.size)

S3Path('s3://s3pathlib/list-objects/folder/logs/day1.txt') 12000

S3Path('s3://s3pathlib/list-objects/folder/logs/day2.txt') 10000

S3Path('s3://s3pathlib/list-objects/logo.png') 8000

[121]:

for s3path in s3dir.iter_objects().filter(S3Path.basename.startswith("data")):

    print(s3path)

S3Path('s3://s3pathlib/list-objects/folder/data1.json')

S3Path('s3://s3pathlib/list-objects/folder/data2.json')

[122]:

for s3path in s3dir.iter_objects().filter(S3Path.abspath.contains("subfolder")):

    print(s3path)

S3Path('s3://s3pathlib/list-objects/folder/subfolder/config.ini')

See also

Below is the full list of built-in comparators:

Logical Operator#

If you want to use multiple criteria, the filter() method takes multiple positional arguments and joins them with logical AND automatically.

[108]:

for s3path in s3dir.iter_objects().filter(S3Path.ext == ".txt", S3Path.size >= 1000):

    print(s3path)

S3Path('s3://s3pathlib/list-objects/folder/logs/day1.txt')

S3Path('s3://s3pathlib/list-objects/folder/logs/day2.txt')

The filter() method can also be chained; all chained filters are joined with logical AND. The criteria are evaluated lazily, as each item is returned.

[110]:

for s3path in (

    s3dir.iter_objects()

    .filter(S3Path.ext == ".txt")

    .filter(S3Path.size >= 1000)

):

    print(s3path)

S3Path('s3://s3pathlib/list-objects/folder/logs/day1.txt')

S3Path('s3://s3pathlib/list-objects/folder/logs/day2.txt')

The and_, or_, and not_ helper functions let you define more complicated filtering logic.

[113]:

from s3pathlib import and_, or_, not_

for s3path in s3dir.iter_objects().filter(not_(or_(S3Path.ext == ".txt", S3Path.ext == ".png"))):

    print(s3path)

S3Path('s3://s3pathlib/list-objects/folder/data1.json')

S3Path('s3://s3pathlib/list-objects/folder/data2.json')

S3Path('s3://s3pathlib/list-objects/folder/subfolder/config.ini')
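The boolean logic of the example above can be reproduced with ordinary predicate functions. This is a stand-alone sketch and not how s3pathlib builds its criteria: `all_of`, `any_of`, `negate`, and `ext_is` are hypothetical names, and `SimpleNamespace` objects stand in for S3Path objects.

```python
from types import SimpleNamespace


def all_of(*preds):
    """Logical AND over predicates."""
    return lambda obj: all(p(obj) for p in preds)


def any_of(*preds):
    """Logical OR over predicates."""
    return lambda obj: any(p(obj) for p in preds)


def negate(pred):
    """Logical NOT of a predicate."""
    return lambda obj: not pred(obj)


def ext_is(ext):
    return lambda obj: obj.ext == ext


objects = [
    SimpleNamespace(name="README.txt", ext=".txt"),
    SimpleNamespace(name="data1.json", ext=".json"),
    SimpleNamespace(name="data2.json", ext=".json"),
    SimpleNamespace(name="day1.txt", ext=".txt"),
    SimpleNamespace(name="day2.txt", ext=".txt"),
    SimpleNamespace(name="config.ini", ext=".ini"),
    SimpleNamespace(name="logo.png", ext=".png"),
]

neither_txt_nor_png = negate(any_of(ext_is(".txt"), ext_is(".png")))
kept = [obj.name for obj in objects if neither_txt_nor_png(obj)]
print(kept)
```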

Custom Filter Function#

You can define your own custom filter function. A filter function is simply a callable that takes exactly one argument, an S3Path object, and returns a boolean value indicating whether to keep this object. If it returns False, the S3Path object will not be returned. You can define arbitrary criteria in your filter function.

[114]:

# keep objects whose size in bytes is an odd number

def size_is_odd(s3path: S3Path) -> bool:

    return s3path.size % 2 == 1

for s3path in s3dir.iter_objects().filter(size_is_odd):

    print(s3path, s3path.size)

S3Path('s3://s3pathlib/list-objects/folder/data1.json') 17

S3Path('s3://s3pathlib/list-objects/folder/data2.json') 15

S3Path('s3://s3pathlib/list-objects/folder/subfolder/config.ini') 21

Note

When filter is used together with the limit argument, the iterator first yields up to limit items and then applies the filter to those results. As a consequence, the final number of matched items is usually SMALLER than limit.
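The effect of applying limit before the filter can be seen with plain Python iterators. The sketch below uses `itertools.islice` as a stand-in for limit and the object sizes from the test data in listing order; it illustrates the ordering only and is not the library's code.

```python
from itertools import islice

# Object sizes in listing order: README.txt, data1.json, data2.json,
# day1.txt, day2.txt, config.ini, logo.png
sizes = [14, 17, 15, 12000, 10000, 21, 8000]


def is_large(size):
    return size >= 1000


# Limit first, filter second: only the first 4 items are ever inspected.
limited_then_filtered = [s for s in islice(sizes, 4) if is_large(s)]

# Filter first, then take up to 4 matches, for comparison.
filtered_then_limited = list(islice((s for s in sizes if is_large(s)), 4))

print(limited_then_filtered)
print(filtered_then_limited)
```

With a limit of 4, the first ordering keeps a single match, although three objects in the folder satisfy the criterion.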

Iter Directory#

The iter_objects() method only returns objects. If you want to iterate over the folders and objects directly under a directory together, you can use the iterdir() method.

[126]:

s3dir.iterdir().all()

[126]:

[S3Path('s3://s3pathlib/list-objects/folder/'),

S3Path('s3://s3pathlib/list-objects/README.txt'),

S3Path('s3://s3pathlib/list-objects/logo.png')]
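A one-level listing like this can be derived from flat keys by splitting on the "/" delimiter, which is similar in spirit to how an S3 listing with a delimiter separates common prefixes from object keys. The helper below is a hypothetical sketch over an in-memory list, not the library's implementation.

```python
def list_children(keys, prefix, delimiter="/"):
    """Return (folders, objects) found directly under prefix."""
    folders, objects = [], []
    for key in sorted(keys):
        if not key.startswith(prefix) or key == prefix:
            continue
        rest = key[len(prefix):]
        head, sep, _ = rest.partition(delimiter)
        if sep:  # a delimiter follows, so `head` names a sub-folder
            folder = prefix + head + delimiter
            if folder not in folders:
                folders.append(folder)
        else:
            objects.append(key)
    return folders, objects


keys = [
    "list-objects/README.txt",
    "list-objects/logo.png",
    "list-objects/folder/data1.json",
    "list-objects/folder/data2.json",
    "list-objects/folder/subfolder/config.ini",
    "list-objects/folder/logs/day1.txt",
    "list-objects/folder/logs/day2.txt",
]
folders, objects = list_children(keys, "list-objects/")
print(folders)
print(objects)
```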

List Object Versions#

On a versioning-enabled bucket, you can list all versions of an object by calling the list_object_versions() method. It returns the versions in reverse chronological order. The is_delete_marker() method can be used to check whether a version is a delete marker.

[13]:

# First, let's prepare some test data

import time

s3path = S3Path("s3pathlib-versioning-enabled/file.txt")

s3path.write_text("v1")

time.sleep(1)

s3path.write_text("v2")

time.sleep(1)

s3path.delete()

time.sleep(1)

s3path.write_text("v3")

time.sleep(1)

s3path.write_text("v4")

time.sleep(1)

s3path.delete()

time.sleep(1)

s3path.write_text("v5")

time.sleep(1)

[16]:

for s3path_versioned in s3path.list_object_versions():

    version_id = s3path_versioned.version_id

    is_delete_marker = s3path_versioned.is_delete_marker()

    try:

        content = s3path_versioned.read_text(version_id=version_id)

    except Exception:

        content = "it's a delete marker"

    print(f"version_id = {version_id}, is_delete_marker = {is_delete_marker}, content = {content}")

version_id = Fh4t9N2vUelLUa8Z8gSSJSDyKTVOMA19, is_delete_marker = False, content = v5

version_id = Hij7d8MKHqv_RaOimyHHTP3IbX9Dpcp8, is_delete_marker = True, content = it's a delete marker

version_id = 29rFPVkoeNj_SbW28yARvZ9rSps1Lr6P, is_delete_marker = False, content = v4

version_id = wJwrSJy36Wa5vJr4DhBw5T_MuQ3hMvMf, is_delete_marker = False, content = v3

version_id = 8ARcxb.AOTVBKPMjBXzhYX9uk5krGrIV, is_delete_marker = True, content = it's a delete marker

version_id = 36HjB5gG7oBzuS7Iiu7GixMyPKzkIEog, is_delete_marker = False, content = v2

version_id = nm2Zw.jv2yGywNmCVsR5wodrHwfPwTUX, is_delete_marker = False, content = v1

What’s Next#

Now that we’ve learned some examples of using S3 read APIs, let’s move on to the next section to learn how to use S3 write APIs.
